RAG in JavaScript: How to Build an Open Source Indexing Pipeline

A Step-by-Step Guide to Implementing Retrieval-Augmented Generation Using JavaScript and Open Source Models

This article is part of a series exploring how to implement Retrieval-Augmented Generation (RAG) in JavaScript using open-source models like Meta-Llama-3.1–8B, Mistral-7B, and more from Hugging Face.

In this article, we’ll cover the following key concepts:

- What is the Indexing Pipeline?

- Chunking Text with LangChain

- Understanding Embeddings with Real-Life Examples

- Creating Embeddings with Node-Llama-CPP

- Using a Vector Database with Supabase

RAG in JavaScript Article Series:

- How to Build an Open Source Indexing Pipeline

- Implementing the Generation Pipeline with Open Source

- Developing a User-Friendly React Interface for Document Uploads and Querying

- Optimizing and Evaluating Retrieval in RAG

Which knowledge you need for this tutorial

- Basics of Node.js

- Basics of SQL

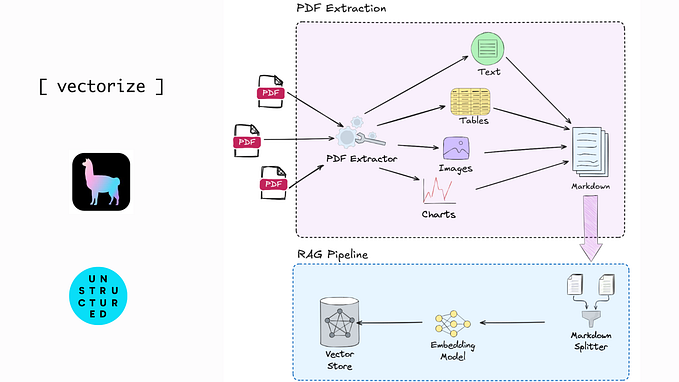

By the end of this article series, you will fully understand the process shown in the following diagram.

Indexing Pipeline

What is the Indexing Pipeline?

The indexing pipeline is a process that prepares and organizes information so that it can be quickly and easily searched later. Think of it like a library:

- Collecting Information: Just like a librarian collects books, the indexing pipeline takes various documents or data (like company handbooks, articles, etc.).

- Breaking it Down: The pipeline then breaks these documents into smaller pieces, called chunks. This is like taking a big book and dividing it into chapters or sections, making it easier to find specific information.

- Creating Representations: For each chunk, the pipeline creates a representation called an embedding. This is like making a summary of the chunk that captures its main ideas. These embeddings help computers understand the content better.

- Storing the Information: Finally, the chunks and their embeddings are stored in a database. This is like putting the books on the right shelves in the library so you can find them quickly later.

When someone asks a question, the system can quickly search through these organized chunks to find the most relevant pieces of information.

Chunking Text with LangChain

How can we prepare a large document for AI by splitting it into smaller pieces and creating embeddings for each piece? We’ll use tools like LangChain and vector databases to handle these tasks.

If you have a very large document, like a company handbook, you’ll want to split it into smaller chunks before creating embeddings. For example, imagine an internal employee portal that helps staff find information on HR procedures, benefits, and workplace guidelines. Instead of searching through a huge handbook all at once, the app can split the handbook into smaller sections (chunks) and then find the relevant chunk when an employee asks a question.

For example:

We will use this example text and save it into a file handbook.txt:

Company Handbook

1. Introduction

Welcome to [Company Name]! This handbook is designed to provide employees with essential information regarding company policies, benefits, and procedures. It serves as a guide to help you navigate workplace expectations and make the most of the resources available to you.

2. Workplace Guidelines

2.1 Attendance and Punctuality

Employees are expected to be present and ready to work during their assigned hours. Consistent tardiness or absenteeism may result in disciplinary action.

2.2 Code of Conduct

All employees must adhere to the highest standards of professionalism, respect, and integrity. Discrimination, harassment, or any form of misconduct will not be tolerated.

2.3 Dress Code

Our dress code is business casual, except on Fridays, which are designated as casual days. Clothing should be neat and appropriate for a professional environment.

3. Procedures

3.1 Requesting Time Off

Employees must submit time-off requests at least two weeks in advance through the company's HR portal. Approval is based on staffing needs and seniority.Document: The handbook contains various procedures, benefits, and workplace guidelines.

- Chunk 1: Security procedures → Embedding for this chunk

- Chunk 2: Health benefits → Embedding for this chunk

- Chunk 3: Vacation policies → Embedding for this chunk

- Chunk 4: Workplace safety guidelines → Embedding for this chunk

- Chunk 5: Leave policies → Embedding for this chunk

- …

- Chunk n: Other workplace guidelines → Embedding for each chunk

Before running the code locally please setup a node.js project and install the dependencies:

# Create a new directory for your project

mkdir rag

cd rag

# Initialize the Node.js project

npm init -y

# Install the necessary dependencies

npm install @supabase/supabase-js@^2.45.4 dotenv@^16.4.5 langchain@^0.3.2 node-llama-cpp@^3.0.3Here’s how you could split a document into chunks using LangChain’s RecursiveCharacterTextSplitter:

// Import the necessary class from LangChain

import { RecursiveCharacterTextSplitter } from 'langchain/text_splitter';

// Function to split a document into smaller chunks

const splitDocument = async (pathToDocument) => {

// Read the document content (assuming it's a text file)

const text = (await fs.readFile(pathToDocument)).toString();

// Create a text splitter with specified chunk size and overlap

const splitter = new RecursiveCharacterTextSplitter({

chunkSize: 250, // Each chunk will have 250 characters

chunkOverlap: 40 // 40 characters from the end of one chunk will overlap with the start of the next

});

// Split the text into chunks

const output = await splitter.createDocuments([text]);

// Return only the content of each chunk (pageContent contains the actual text)

return output.map(document => document.pageContent);

};

// Example usage: Split a document called 'handbook.txt'

const handbookChunks = await splitDocument('handbook.txt');

// The handbookChunks array will now contain each chunk of text.Adjust chunkSize and chunkOverlap to your needs, if you expect short questions it's ideal to have short chunks in the database and vice versa.

The Different Text Splitters

There are various ways to split text, and LangChain offers different types of splitters based on your needs:

- RecursiveCharacterTextSplitter: Splits text while ensuring the chunks don’t break meaningful sentences or words. It’s good for documents with structured text.

- CharacterTextSplitter: Splits text based on character count, which is simple but can split important sentences in awkward places.

- TokenTextSplitter: Splits based on tokens rather than characters, which can be useful when you care more about the number of words than individual characters.

- MarkdownTextSplitter: Specially designed for splitting markdown-formatted text, preserving the structure of headings, lists, etc.

- LatexTextSplitter: Designed to split LaTeX documents, ensuring mathematical expressions and formatting are preserved correctly.

For example, if you’re splitting a markdown document, you would use the MarkdownTextSplitter to ensure the formatting stays intact.

Understanding Embeddings with a Real-Life Example

Imagine you’re organizing a big family reunion, and you want to find people who have similar interests. Each family member has different hobbies like gardening, cooking, hiking, etc. Instead of comparing each person by their exact hobby (e.g., “gardening”), you want to compare them based on the type of hobby they enjoy (outdoor activities, creative work, etc.) to find similar groups of people.

This is what embeddings do in the world of AI.

We will use the word vector throughout this article. The simplest explanation of a vector is that it’s like an arrow that points in a certain direction and has a certain length.

In computers and AI, a vector is just a list of numbers that represent something (like a word or a piece of information). The direction and length of this “arrow” tell us how similar or different things are from each other.

Real-Life Example: Family Hobbies

Let’s say we have three people with the following hobbies:

- Alice loves gardening.

- Bob enjoys hiking.

- Charlie is into cooking.

We can think of each hobby as being part of a larger category. For example:

- Gardening and hiking are both outdoor activities.

- Cooking is a creative activity.

Instead of comparing people by their exact words, we compare them based on the meaning or category of their hobbies.

How Embeddings Work

When we create embeddings for texts, we turn words into numbers (vectors) that represent the meaning behind the text, similar to how we grouped family members by the type of hobbies they like.

For example:

- Alice’s vector for gardening might look like:

[0.8, 0.1] - Bob’s vector for hiking might be:

[0.7, 0.2] - Charlie’s vector for cooking could be:

[0.1, 0.9]

Each number in these vectors represents a “dimension” of meaning. For simplicity:

- The first number in the vector could represent how much this activity relates to outdoor activities.

- The second number could represent how much it relates to creative activities.

Here’s the vector representation of hobbies:

- Gardening (Alice):

[0.8, 0.1](mostly outdoor, a little creative) - Hiking (Bob):

[0.7, 0.2](mostly outdoor) - Cooking (Charlie):

[0.1, 0.9](mostly creative)

Even though gardening and hiking are different activities, their vectors are close to each other because they’re both outdoor activities. Cooking, however, has a very different vector because it’s mostly a creative activity.

Matching with Embeddings

Now, let’s say you want to find someone similar to Bob, who enjoys hiking. You would take Bob’s vector [0.7, 0.2]and compare it to Alice’s and Charlie’s vectors.

The cosine similarity between Bob and Alice’s vectors (hiking vs. gardening):

Similarity = (0.7 * 0.8) + (0.2 * 0.1) = 0.56 + 0.02 = 0.58The similarity score is 0.58, meaning Alice and Bob are fairly similar (both enjoy outdoor activities).

Now compare Bob’s vector with Charlie’s (hiking vs. cooking):

Similarity = (0.7 * 0.1) + (0.2 * 0.9) = 0.07 + 0.18 = 0.25The similarity score is 0.25, meaning Bob and Charlie are much less similar because they have different types of hobbies.

This is exactly how embeddings work in AI. Instead of comparing text word-for-word, embeddings look at the underlying meaning and give us a similarity score based on that.

Example: Short Array Embeddings in AI

Let’s say we have the following text snippets, and we want to compare them to a query using embeddings:

- Query: “Unapproved expenses”

- Text A: “Unapproved expenses will not be reimbursed.”

- Text B: “Unauthorized purchases won’t be covered.”

- Text C: “Employees are encouraged to submit travel reports.”

If we create embeddings for these texts, they might look like:

- Query: [0.85, 0.05]

- Text A (Expenses): [0.8, 0.1]

- Text B (Unauthorized purchases): [0.75, 0.15]

- Text C (Travel reports): [0.3, 0.7]

Here’s what happens when we compare the query to the stored vectors:

Cosine similarity similarity between Query and A (both about expenses):

Similarity = 0.85 * 0.8 + 0.05 * 0.1 = 0.68 + 0.005 = 0.685Similarity between Query and B (expenses vs. unauthorized purchases):

Similarity = 0.85 * 0.75 + 0.05 * 0.15 = 0.6375 + 0.0075 = 0.645Similarity between Query and C (expenses vs. travel):

Similarity = 0.85 * 0.3 + 0.05 * 0.7 = 0.255 + 0.035 = 0.29Since Text A has the highest similarity score (0.685), the system would return Text A as the best match for the query. Text B is somewhat related (0.645), but Text C (0.29) is much less relevant because it’s about travel.

Create Embedding

Once you have chunks of your document, the next step is to create embeddings for each one. Embeddings capture the meaning of the text and convert it into numerical values so the AI can work with them.

You can download the model from here.

Here’s an example of creating embeddings using the Llama model:

// Import the Llama model loader

import { getLlama } from "node-llama-cpp";

import path from "path";

// Load the Llama model

const llama = await getLlama();

// Specify the path to the model file

const model = await llama.loadModel({

modelPath: path.join("./models", "Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf") // Make sure you have the model downloaded locally

});

// Create a context for generating embeddings

const context = await model.createEmbeddingContext();

// Function to create embeddings for each document chunk

const embedDocuments = async (documents) => {

const embeddings = [];

// For each document chunk, create its embedding

await Promise.all(

documents.map(async (document) => {

const embedding = await context.getEmbeddingFor(document); // Get the embedding for the document

embeddings.push({ content: document, embedding: embedding.vector }); // Store the chunk and its embedding

console.debug(`${embeddings.length}/${documents.length} documents embedded`); // Debug log for progress tracking

})

);

// Return all embeddings

return embeddings;

};

// Example usage: Create embeddings for all chunks of the handbook

const documentEmbeddings = await embedDocuments(handbookChunks);In this case, the embedDocuments function creates an embedding for each chunk of the handbook.

What Are Vector Databases?

Now that we have our embeddings, we need somewhere to store and search through them efficiently. This is where vector databases come in. A vector database stores embeddings (which are essentially large arrays of numbers) and allows us to search them by similarity.

Let’s break this down:

- Chroma: An open-source vector database that’s fast and easy to use, perfect for quick searches within embeddings.

- Pinecone: A managed service that focuses on scalability, making it a good choice when handling a huge number of embeddings.

- Supabase: While not a dedicated vector database, Supabase allows you to store embeddings in a relational database with a lot of flexibility. You can use it for smaller projects where you also want the ability to store and manage other types of data.

We will use Supabase in this example.

Go to supabase.com and click on “Start your project”.

You will see the login page. If you do not already have an account follow the link “Sign up now”. An easy way is to use your existing GitHub account and click on “Continue with Github”.

When you reach the Dashboard click on “New Project”. It will first take you to the form to “Create a new organization” and afterward to “Create a new project”, you can choose any name, password and region you prefer.

Next, select Database from the side menu as shown below.

Please also enable the vector extension. Navigate under Database to Extensions. Search for a vector and enable that extension, like shown below:

Next, go to Project Settings > API and copy the Project URL & Project API Key:

Create a .env file in the rag folder and paste the values into it:

# .env file

SUPABASE_URL=your_supabase_url

SUPABASE_API_KEY=your_supabase_api_keyNow we add the database connection to our code:

// Import dotenv to load environment variables and Supabase client

import 'dotenv/config';

import { createClient } from '@supabase/supabase-js';

// Create a Supabase client using the URL and API key from the .env file

const supabase = createClient(process.env.SUPABASE_URL, process.env.SUPABASE_API_KEY);Go to SQL Editor and add the following CREATE TABLE statement:

CREATE TABLE handbook_docs (

id bigserial primary key, // Automatically incrementing ID

content text, // The text content of the chunk

embedding vector(4096) // The vector embedding (4096 dimensions)

)

Next, we add an insert call to our script to insert our embedding into our vector database:

// Function to insert document embeddings into the Supabase table

const insertEmbeddings = async (embeddings) => {

// Insert embeddings into the 'handbook_docs' table

const { error } = await supabase

.from('handbook_docs')

.insert(embeddings); // Insert all embeddings at once

// Handle any errors that occur during the insertion

if (error) {

console.error('Error inserting embeddings:', error);

} else {

console.log('Embeddings inserted successfully!');

}

};

// Example usage: Insert embeddings into the database

await insertEmbeddings(documentEmbeddings);After we run our script we can go to Table Editor and click on our table handbook_docs to see the uploaded data.

That’s it we implemented the Indexing Pipeline, congratiolation.

Summary

In this article, we explored how to build an open-source indexing pipeline using JavaScript and various tools like LangChain, Node-Llama-CPP, and Supabase. We walked through the process of splitting a large document into manageable chunks, creating embeddings to capture the meaning of each chunk, and storing these embeddings in a vector database for efficient retrieval. This forms the foundation of a Retrieval-Augmented Generation (RAG) system, allowing us to query documents based on semantic similarity rather than exact word matches. By following these steps, you now have the tools to build your own RAG pipeline and enhance the performance of AI-powered search in your applications.